Create GitLab pipelines dynamically - Mon, May 8, 2023

Create GitLab pipelines dynamically

A few months ago I wrote about the missing expressiveness of GitLab's CI YAML, especially the rules that govern when a job is added to a pipeline. My conclusion then was that I wanted something like a fluent API for building the CI YAML file. Going a step further in that direction, I discovered that the CI YAML can be created dynamically. In short: rather than having a static YAML file, this approach lets you create pipelines and their jobs programmatically in a programming language of your choice. This blog post takes a closer look at how easy this is and how it may help you get a more expressive, readable CI pipeline definition.

Why?

The obvious question is: why should I switch from writing YAML to programming? One major advantage I found has to do with complexity: the more complex the YAML is, the harder it is to read and maintain. By programming the pipeline we can use all the usual means of keeping code maintainable (e.g. linting, testing). GitLab's CI YAML does offer different levels of reuse (e.g. YAML anchors, aliases, overrides, includes), but I think a programming language offers more capable reuse techniques (e.g. functions and inheritance). One last important point is that the possibilities for tailoring the pipeline to individual pipeline properties are greater and easier to use than in YAML. Beware, though, that there are some drawbacks, which I will look at later.

The (not so) secret ingredient: dynamic child pipelines

The key concept here is dynamic child pipelines. Rather than having a single static pipeline, we have a parent pipeline consisting of a job that builds the child pipeline's YAML and a trigger job that runs a pipeline with that YAML. Here's an example of what that looks like:

    stages:
      - build
      - run

    generate-config:
      stage: build
      script: generate-ci-config > generated-config.yml
      artifacts:
        paths:
          - generated-config.yml

    child-pipeline:
      stage: run
      trigger:
        include:
          - artifact: generated-config.yml
            job: generate-config

The tool or language you use to build the file generated-config.yml can be chosen freely. For example, if your project is Python-centric, you might want to choose Python for creating the CI YAML.
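
To make the `generate-ci-config` step above a little more concrete, here is a minimal sketch of what such a generator could look like in Python. It is an illustration under assumptions, not the script used in this post: the function name `build_pipeline`, the component names and the `echo` commands are made up, and it relies on the PyYAML package being installed.

```python
#!/usr/bin/env python3
"""Hypothetical generate-ci-config: prints a child pipeline definition to stdout."""
import sys

import yaml  # PyYAML, assumed to be installed in the job's image


def build_pipeline(components):
    """Return the child pipeline as a plain dict, one test job per component."""
    pipeline = {"stages": ["test"]}
    for name in components:
        pipeline[f"test-{name}"] = {
            "stage": "test",
            "script": [f"echo 'Testing {name}'"],
        }
    return pipeline


def main():
    # The component names are invented for illustration only.
    pipeline = build_pipeline(["api", "frontend"])
    # sort_keys=False keeps 'stages' at the top of the generated file.
    yaml.safe_dump(pipeline, sys.stdout, sort_keys=False)


if __name__ == "__main__":
    main()
```

Because the script writes to stdout, the parent job can redirect its output into generated-config.yml, in the same way the `generate-config` job does in the example above.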

Example in python

I did indeed choose Python to generate the CI YAML, since it is easy to get started with and has good YAML support. As a first use case I implemented building all Dockerfiles in a repository. Here is an excerpt of the code (the complete example can be found in my GitLab project super pipelines):

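    import os

    import yaml  # PyYAML

    if __name__ == '__main__':
        # Load the static part of the pipeline.
        with open('./templates/base-pipeline.yml', 'r') as f:
            base_pipeline = yaml.safe_load(f)

        # Every file in the current directory whose name ends in 'Dockerfile'.
        dockerfiles = [f for f in os.listdir('.') if os.path.isfile(f) and f.endswith('Dockerfile')]

        for dockerfile in dockerfiles:
            # 'ci.Dockerfile' -> 'ci'; the prefix doubles as the image name.
            prefix = dockerfile.split('.')[0]

            # Re-read the template on each iteration so every job gets its own copy.
            with open('./templates/build-container-job.yml', 'r') as f:
                build_container = yaml.safe_load(f)

            job_name = list(build_container.keys())[0]
            build_job = build_container[job_name]
            build_job['variables']['IMAGE_NAME'] = f"/{prefix}"
            new_job_name = f"{job_name}-{prefix}"
            base_pipeline[new_job_name] = build_job

        # Print the result to the job log and write it to a file.
        print(yaml.dump(base_pipeline))
        with open('pipeline.yml', 'w') as f:
            yaml.dump(base_pipeline, f)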

The code searches for all Dockerfiles, extracts the prefix (which is the name of the image) and adds a build job to the pipeline for each one, setting the name of the image to be used. The template job looks like this:

    build-container:
      variables:
        IMAGE_NAME: ""
      image:
        name: gcr.io/kaniko-project/executor:debug
        entrypoint: [""]
      stage: build
      script: |-
        mkdir -p /kaniko/.docker
        echo "{\"auths\":{\"$CI_REGISTRY\":{\"username\":\"$CI_REGISTRY_USER\",\"password\":\"$CI_REGISTRY_PASSWORD\"}}}" > /kaniko/.docker/config.json
        /kaniko/executor --context $CI_PROJECT_DIR --dockerfile $CI_PROJECT_DIR/ci.Dockerfile --destination $CI_REGISTRY_IMAGE$IMAGE_NAME:$CI_COMMIT_SHORT_SHA --destination $CI_REGISTRY_IMAGE$IMAGE_NAME:latest

It's a valid GitLab CI job and could be reused without the Python script as well. The created pipeline looks like this:

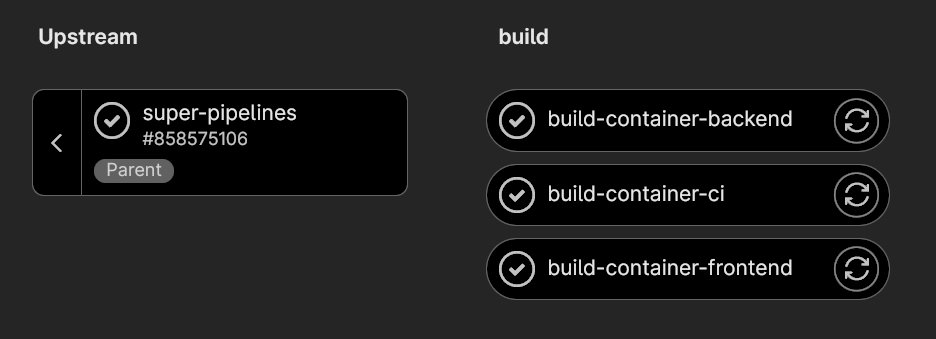

As you can see, a job has been added for every Dockerfile. Also visible is one of the drawbacks of this approach: the `upstream` pipeline, which would not be created when using plain CI YAML for this task.
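
One benefit claimed earlier is that generated pipelines can be tested like any other code. As a hedged sketch of what that might mean for the Dockerfile example, the loop could be pulled into a pure function that works on already-parsed dictionaries, so a test needs neither files nor GitLab. The names `image_name_for`, `add_build_jobs` and `test_add_build_jobs` are hypothetical and not part of the linked project.

```python
import copy


def image_name_for(dockerfile):
    """Map 'ci.Dockerfile' to 'ci', mirroring the split('.')[0] used above."""
    return dockerfile.split(".")[0]


def add_build_jobs(base_pipeline, template, dockerfiles):
    """Return a new pipeline dict with one build job per Dockerfile.

    `template` is the parsed template file: a dict holding a single job.
    """
    pipeline = copy.deepcopy(base_pipeline)
    job_name, job = next(iter(template.items()))
    for dockerfile in dockerfiles:
        prefix = image_name_for(dockerfile)
        new_job = copy.deepcopy(job)  # avoid sharing nested dicts between jobs
        new_job["variables"]["IMAGE_NAME"] = f"/{prefix}"
        pipeline[f"{job_name}-{prefix}"] = new_job
    return pipeline


def test_add_build_jobs():
    base = {"stages": ["build"]}
    template = {"build-container": {"stage": "build", "variables": {"IMAGE_NAME": ""}}}
    result = add_build_jobs(base, template, ["ci.Dockerfile", "app.Dockerfile"])
    assert result["build-container-ci"]["variables"]["IMAGE_NAME"] == "/ci"
    assert result["build-container-app"]["variables"]["IMAGE_NAME"] == "/app"
    assert base == {"stages": ["build"]}  # the inputs are left untouched
```

A test runner such as pytest would pick up `test_add_build_jobs` automatically; the file handling and the YAML dump stay in a thin wrapper around the function.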

Making it even more expressive

I think the code above is more readable than the same functionality written as part of a single script-based job or with CI YAML templates and includes. But I wanted to go a step further, as outlined above, and make it a fluent API. So I implemented a basic fluent API using the builder pattern. The resulting code now looks even more readable (the complete code, including the builders for the API, can be found here):

    # PipelineBuilder and JobBuilder are the builder classes from the linked
    # project; os and yaml are imported as in the first example.
    base_pipeline = PipelineBuilder().with_stage('build')

    dockerfiles = [f for f in os.listdir('.') if os.path.isfile(f) and f.endswith('Dockerfile')]

    for dockerfile in dockerfiles:
        prefix = dockerfile.split('.')[0]
        new_job_name = f"build-container-{prefix}"
        job = (
            JobBuilder.create_job()
            .named(new_job_name)
            .running_in_image('gcr.io/kaniko-project/executor:debug')
            .using_script("build-comtainer.sh")
            .in_stage('build')
            .with_variables("IMAGE_NAME", f"/{prefix}")
        )
        base_pipeline.job(job)

    print(yaml.dump(base_pipeline.get_pipeline()))
    with open('pipeline.yml', 'w') as f:
        yaml.dump(base_pipeline.get_pipeline(), f)

You might have noticed that I replaced the template job definition with a simple shell script, which seems more fitting for this approach.
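
For readers unfamiliar with the builder pattern, the following is a minimal sketch of how builders with the method names used above could be implemented. It is an assumption about the general shape, not the code from the linked project: the `build()` method, the internal dictionaries and the script name `./build-image.sh` are invented for illustration.

```python
class JobBuilder:
    """Collects job settings in a dict; every setter returns self for chaining."""

    def __init__(self):
        self._name = None
        self._job = {}

    @classmethod
    def create_job(cls):
        return cls()

    def named(self, name):
        self._name = name
        return self

    def running_in_image(self, image):
        self._job["image"] = image
        return self

    def using_script(self, script):
        self._job["script"] = [script]
        return self

    def in_stage(self, stage):
        self._job["stage"] = stage
        return self

    def with_variables(self, key, value):
        self._job.setdefault("variables", {})[key] = value
        return self

    def build(self):
        # Hypothetical helper: hands the finished job to the pipeline builder.
        if self._name is None:
            raise ValueError("a job needs a name")
        return self._name, dict(self._job)


class PipelineBuilder:
    """Assembles the top-level pipeline dict that is later dumped as YAML."""

    def __init__(self):
        self._pipeline = {"stages": []}

    def with_stage(self, stage):
        if stage not in self._pipeline["stages"]:
            self._pipeline["stages"].append(stage)
        return self

    def job(self, job_builder):
        name, definition = job_builder.build()
        self._pipeline[name] = definition
        return self

    def get_pipeline(self):
        return self._pipeline


# Usage: the chained calls read almost like a description of the job.
pipeline = (
    PipelineBuilder()
    .with_stage("build")
    .job(
        JobBuilder.create_job()
        .named("build-container-ci")
        .running_in_image("gcr.io/kaniko-project/executor:debug")
        .using_script("./build-image.sh")
        .in_stage("build")
        .with_variables("IMAGE_NAME", "/ci")
    )
    .get_pipeline()
)
```

Returning `self` from every setter is what makes the chaining possible. The resulting `pipeline` is a plain dictionary, so it can be passed to `yaml.dump` exactly as in the excerpt above.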

Drawbacks and pitfalls

We have already seen one drawback: instead of having one pipeline, we have two. Thankfully GitLab's UI makes it easy to jump directly into the child pipeline. Another pitfall is that you might just replace one tool with another without really improving anything. The code for programming the pipeline might in the end be as complicated to comprehend as the YAML. The introduction of a programming language might actually be a problem in itself, since it means that people working on the project need at least basic knowledge of that language.

Conclusion

Switching from coding YAML to programming pipelines is most useful when CI configurations are complex and lengthy. A programming language can help reduce complexity and increase reuse and readability. Using a programming language might also open up a whole new universe of resources that can be used in a pipeline definition, for example the GitLab API.